The Dynamic Vision Sensor (DVS), developed by the Sensors Group at the Institute of Neuroinformatics (INI) and commercialized by iniLabs, represents a neuromorphic revolution in imaging technology. Unlike conventional frame-based CMOS image sensors, the DVS mimics the human retina’s ability to detect changes in brightness and motion in real time. This article explores the unique features of the DVS, its working principles, and how it compares to other neuromorphic cameras on the market.

What Sets the DVS Apart from CMOS Sensors?

Traditional CMOS image sensors capture visual information frame by frame, reading out every pixel at a fixed rate, which leads to high data redundancy and latency. In contrast, the DVS is an event-based sensor that records only changes in brightness at each pixel. Key differences include:

- Data Efficiency: Frame-based CMOS sensors read out every pixel in every frame, whether or not the scene has changed, while the DVS produces sparse output by capturing only dynamic changes, which greatly reduces data volume in scenes with limited motion.

- Low Latency: The DVS timestamps events with a resolution of 1 microsecond, enabling it to respond almost instantly to changes in a scene.

- High Dynamic Range: With a dynamic range of 120 dB, the DVS outperforms typical frame-based CMOS sensors in challenging lighting conditions.
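For image sensors, dynamic range in decibels is conventionally defined as $20\log_{10}(I_{max}/I_{min})$, so 120 dB corresponds to a ratio of $10^6$ between the brightest and dimmest intensities the sensor can handle. A minimal sketch of the conversion (the function names are illustrative):

```python
import math


def db_to_ratio(db: float) -> float:
    """Convert a dynamic range in dB to an intensity ratio, using DR = 20*log10(ratio)."""
    return 10 ** (db / 20)


def ratio_to_db(ratio: float) -> float:
    """Convert an intensity ratio back to decibels."""
    return 20 * math.log10(ratio)


print(f"{db_to_ratio(120):.0f}")  # 1000000, i.e. a 1,000,000 : 1 intensity ratio
```

Each additional 20 dB multiplies the usable intensity ratio by ten.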

A Neuromorphic Revolution: The DVS’s Low Latency

The Dynamic Vision Sensor (DVS) achieves microsecond-level temporal resolution, enabling near-instantaneous detection of changes in a scene. Its latency, orders of magnitude shorter than the frame interval of a conventional CMOS camera, unlocks critical advantages for real-time systems, particularly in dynamic environments where split-second decisions are paramount. Below, we explore how this capability translates into tangible benefits across industries.

1. Immediate Response to High-Speed Motion

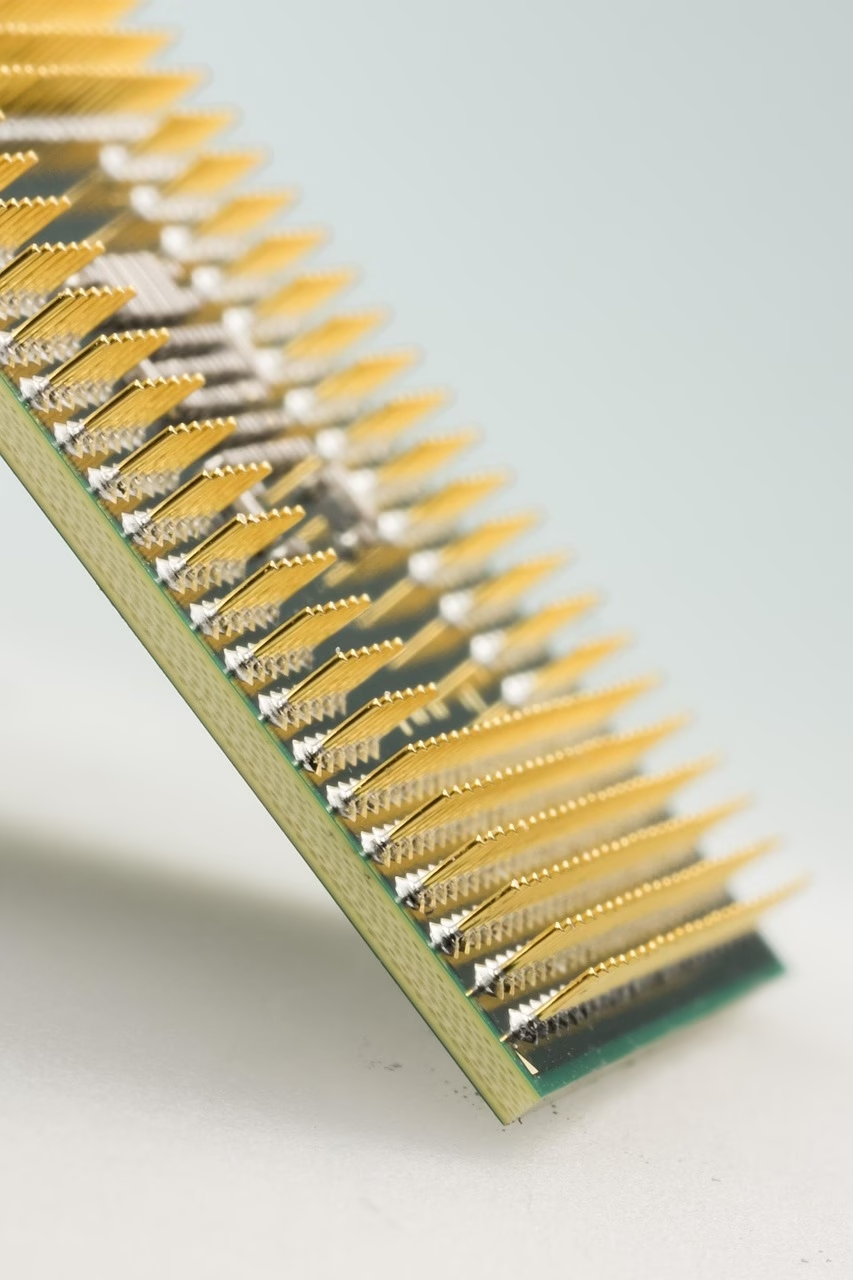

DVS pixels detect brightness changes asynchronously, bypassing the frame-based capture of conventional CMOS cameras (typically 30–1000 FPS). Events are transmitted as they occur over the Address-Event Representation (AER) bus, eliminating inter-frame delays.

Applications:

- Robotics: Enables robots to react with minimal delay to moving objects or obstacles, such as catching fast-moving items on a conveyor belt.

- Autonomous Vehicles: Registers the motion of pedestrians, cyclists, or sudden obstacles within microseconds, giving collision-avoidance systems earlier warning.

- Sports Analytics: Tracks high-speed motions (e.g., a tennis serve or baseball pitch) with microsecond temporal precision.

2. Reduced Computational Overhead

By transmitting only sparse event data (pixel-level brightness changes), the DVS minimizes redundant information processing. This reduces the amount of data downstream algorithms must analyze, and with it their processing time.
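To make the data-volume argument concrete, the sketch below compares raw readout rates under stated assumptions: a 128×128 array, 8-bit frames at 30 FPS, and a hypothetical 8-byte encoding per event (real event formats vary by sensor and interface). The event rates are illustrative values, not measurements.

```python
def frame_bytes_per_second(width: int, height: int, fps: int, bytes_per_pixel: int = 1) -> int:
    """Raw data rate of a frame camera: every pixel is read out in every frame."""
    return width * height * bytes_per_pixel * fps


def event_bytes_per_second(events_per_second: int, bytes_per_event: int = 8) -> int:
    """Data rate of an event stream: scales with scene activity, not resolution."""
    return events_per_second * bytes_per_event


# Illustrative assumptions: 128 x 128 pixels, 8-bit frames at 30 FPS,
# and a hypothetical 8-byte encoding per event.
frames = frame_bytes_per_second(128, 128, 30)   # 491,520 bytes/s
quiet = event_bytes_per_second(10_000)          # 80,000 bytes/s (little motion)
busy = event_bytes_per_second(100_000)          # 800,000 bytes/s (lots of motion)
break_even = frames // 8                        # 61,440 events/s gives equal rates
print(frames, quiet, busy, break_even)
```

The comparison also shows that the savings are scene-dependent: under these assumptions a quiet scene needs about a sixth of the frame data, while a very busy scene can exceed it.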

Applications:

- Drones: Allows lightweight processors to maintain real-time navigation in cluttered environments.

- Augmented Reality (AR): Enables smooth tracking of rapid hand gestures or object movements with minimal lag.

3. Resilience to Motion Blur and Overexposure

Because DVS pixels do not integrate light over an exposure window, fast motion is recorded as a stream of events rather than smeared into blur, and the logarithmic response keeps pixels from saturating in high-contrast scenes.

Applications:

- Industrial Automation: Inspects fast-moving assembly lines with less risk of missing defects.

- Space Exploration: Tracks debris or celestial objects in extreme lighting conditions.

How Does a DVS Pixel Work?

Each pixel in the DVS operates independently and asynchronously. The process can be broken down as follows:

- Light Detection: A photodiode converts incoming light into an electrical current proportional to its intensity.

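- Logarithmic Response: The photoreceptor produces a voltage proportional to the logarithm of the light intensity, so the pixel responds to relative (contrast) changes rather than absolute ones, ensuring sensitivity across a wide dynamic range.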

- Event Generation: When the change in log intensity since the pixel’s last event exceeds a predefined threshold, an “event” is triggered. The event contains:

  - Timestamp $t$

  - Pixel coordinates $(x, y)$

  - Polarity $p$, indicating whether brightness increased or decreased.

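- Noise Filtering: Because only changes that exceed the threshold produce events, small fluctuations are ignored. After each event, the pixel resets its reference level, so the next change is measured from the new brightness.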
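The event-generation steps above can be sketched as an idealized single-pixel model. This is a simplification under stated assumptions: it works on sampled intensities, uses fixed contrast thresholds for ON (`c_on`) and OFF (`c_off`) events expressed in log-intensity units, and ignores noise, refractory time, and photoreceptor bandwidth. The names `Event`, `pixel_events`, `c_on`, and `c_off` are hypothetical, not part of any DVS software.

```python
import math
from typing import List, NamedTuple, Sequence, Tuple


class Event(NamedTuple):
    t: float  # timestamp in seconds
    x: int    # pixel column
    y: int    # pixel row
    p: int    # polarity: +1 = brighter (ON), -1 = darker (OFF)


def pixel_events(samples: Sequence[Tuple[float, float]], x: int, y: int,
                 c_on: float = 0.2, c_off: float = 0.2) -> List[Event]:
    """Idealized DVS pixel: emit one event per threshold step of log-intensity
    change, measured from the level memorized at the previous event."""
    events: List[Event] = []
    ref = math.log(samples[0][1])  # reference log intensity at start
    for t, intensity in samples[1:]:
        log_i = math.log(intensity)
        while log_i - ref >= c_on:    # brighter by at least one ON step
            ref += c_on               # move the reference up one step
            events.append(Event(t, x, y, +1))
        while ref - log_i >= c_off:   # darker by at least one OFF step
            ref -= c_off
            events.append(Event(t, x, y, -1))
    return events


# Brightness doubles, then drops to 1.2x the starting level.
evts = pixel_events([(0.0, 100.0), (0.001, 200.0), (0.002, 120.0)], x=5, y=7)
print([e.p for e in evts])  # [1, 1, 1, -1, -1]
```

Advancing the reference by one threshold step per event (rather than snapping it to the current level) means a large change yields several events with the same timestamp, a common simplification in event-camera models.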

The Role of Bias Parameters in DVS Pixel Operation

Bias parameters in the Dynamic Vision Sensor (DVS) pixel are critical for tuning its sensitivity, noise tolerance, and temporal behavior. These analog voltage or current settings act as “control knobs” to optimize the pixel’s response to light changes, balancing speed, power consumption, and signal fidelity. Below, we dissect their roles and impact on the DVS’s event-based operation.

1. Photoreceptor Circuit Biases

The photoreceptor converts light into a voltage signal with a logarithmic response. Key biases include:

- Photodiode Bias (VPD): Controls the photodiode’s operating point, influencing sensitivity to light intensity. Higher bias increases responsiveness but also increases power consumption.

- Log Compression Bias: Adjusts the logarithmic compression strength, enabling the pixel to handle high dynamic range (HDR) scenes.

2. Differentiator Circuit Biases

This stage detects temporal changes in brightness. Relevant parameters:

- Differentiator Gain (VDiff): Sets the amplification of the brightness change signal. Higher gain increases sensitivity to small changes but amplifies noise.

- Threshold Voltage (VTh): Determines the minimum brightness change required to trigger an event. Lower thresholds detect finer motions but increase false positives.
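As a rough worked example of the threshold trade-off, assume the threshold corresponds to a contrast step $C$ in log intensity. This is an abstraction: VTh is a circuit voltage, and its mapping to $C$ is chip-specific. A step change from $I_1$ to $I_2$ then produces about $\lfloor |\ln(I_2/I_1)| / C \rfloor$ events. The function name below is hypothetical.

```python
import math


def events_for_step(i_before: float, i_after: float, contrast_threshold: float) -> int:
    """Approximate event count for a step change in brightness, assuming an
    idealized pixel that fires once per contrast_threshold of log-intensity change."""
    return math.floor(abs(math.log(i_after / i_before)) / contrast_threshold)


# A doubling of brightness at three different thresholds.
print([events_for_step(100.0, 200.0, c) for c in (0.1, 0.15, 0.3)])  # [6, 4, 2]

# A 5% flicker: ignored at C = 0.1, but it fires an event at C = 0.03.
print(events_for_step(100.0, 105.0, 0.1), events_for_step(100.0, 105.0, 0.03))  # 0 1
```

The second line illustrates why lower thresholds pick up finer motion but also respond to small, unwanted fluctuations such as flicker.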

3. Refractory Period Control

After generating an event, the pixel enters a refractory period during which it cannot fire again, which limits its event rate and prevents oscillation. The relevant bias is:

- Refractory Time Constant (τref): Governs how long the pixel remains inactive after an event. Shorter periods allow faster response but let more noise through; longer periods stabilize output.
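The effect of the refractory period on an event stream can be approximated in software as a per-pixel filter that discards any event arriving less than `tau_ref` seconds after the last accepted event from the same pixel. This is a hypothetical downstream sketch, not the analog circuit itself, and it assumes events are `(t, x, y, p)` tuples sorted by timestamp. It also makes the rate cap visible: each pixel can emit at most $1/\tau_{ref}$ events per second.

```python
from typing import Dict, Iterable, List, Tuple

Event = Tuple[float, int, int, int]  # (t in seconds, x, y, polarity)


def refractory_filter(events: Iterable[Event], tau_ref: float) -> List[Event]:
    """Keep an event only if its pixel has been quiet for at least tau_ref seconds."""
    last_fired: Dict[Tuple[int, int], float] = {}
    kept: List[Event] = []
    for t, x, y, p in events:
        prev = last_fired.get((x, y))
        if prev is None or t - prev >= tau_ref:
            kept.append((t, x, y, p))
            last_fired[(x, y)] = t  # only accepted events restart the window
    return kept


# A burst of four events from pixel (10, 10) plus one from its neighbor (10, 11).
burst = [(0.0, 10, 10, 1), (0.0001, 10, 10, 1), (0.0002, 10, 11, 1),
         (0.0003, 10, 10, 1), (0.0012, 10, 10, -1)]
filtered = refractory_filter(burst, tau_ref=0.001)
print(len(filtered))  # 3: the two events inside the 1 ms window are dropped
```

Raising `tau_ref` thins out bursts from busy pixels, matching the density-versus-resolution trade-off listed for τref in the table that follows.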

4. Comparator Biases

The comparator generates ON or OFF events (polarity) depending on the direction of the brightness change:

- Hysteresis Voltage (VHyst): Prevents flickering by adding a dead zone between ON and OFF event thresholds.

- Comparator Offset Calibration: Fine-tunes symmetry between ON/OFF event detection.

Practical Implications of Bias Adjustments

| Bias Parameter | Effect of Increasing | Trade-Off |
|---|---|---|
| VTh | Reduces noise by ignoring small changes | Misses subtle motion |
| VDiff | Enhances sensitivity to slow-moving objects | Increases susceptibility to flicker |
| τref | Reduces event density in high-activity scenes | Limits temporal resolution |
| VHyst | Stabilizes output in fluctuating lighting | Delays detection of rapid polarity changes |

Why Bias Tuning Matters

- Adaptability: Biases let users tailor the DVS for specific scenarios (e.g., robotics vs. low-light surveillance).

- Power Efficiency: Lowering photoreceptor bias reduces power consumption at the cost of sensitivity.

- Noise Mitigation: Adjusting VTh and τref suppresses environmental noise (e.g., flickering lights).

Contrast with CMOS Sensors

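Conventional CMOS image sensors apply a single exposure time and gain to the whole pixel array. DVS biases are also set chip-wide, but because each pixel responds to changes relative to its own most recent brightness level, adaptation effectively happens pixel by pixel. This local adaptation is characteristic of neuromorphic vision systems and mirrors the adaptation seen in the biological retina.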

Bias parameters are the backbone of DVS customization, allowing engineers to optimize performance for diverse applications. Event-based sensors from Prophesee and Sony use similar tuning mechanisms but differ in bias granularity and default configurations. Mastering these parameters is essential for unlocking the DVS’s full potential in high-speed, low-power, and HDR environments.

Event Transmission via Address-Event Representation (AER)

The generated events are transmitted over an Address-Event Representation (AER) bus. In this asynchronous communication protocol, each event is sent as a digital word encoding the pixel’s address and polarity, and a timestamp is often attached by the receiving interface. Because only pixels that have fired access the bus, inactive pixels consume no bandwidth, which further enhances data sparsity and processing speed.
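The exact AER word format is chip-specific. As a hypothetical illustration, assume a 128×128 array whose address word packs the polarity into bit 0, the column into bits 1–7, and the row into bits 8–14, with the timestamp handled separately (for example, added by the receiving interface). Packing and unpacking such a word is a matter of shifts and masks; `encode_aer` and `decode_aer` are illustrative names.

```python
from typing import Tuple

X_BITS = 7  # hypothetical layout: 128 columns
Y_BITS = 7  # hypothetical layout: 128 rows


def encode_aer(x: int, y: int, polarity: int) -> int:
    """Pack (x, y, polarity) into one address word laid out as [y:7][x:7][p:1]."""
    if not (0 <= x < (1 << X_BITS) and 0 <= y < (1 << Y_BITS) and polarity in (0, 1)):
        raise ValueError("address or polarity out of range")
    return (y << (X_BITS + 1)) | (x << 1) | polarity


def decode_aer(word: int) -> Tuple[int, int, int]:
    """Recover (x, y, polarity) from an address word."""
    polarity = word & 1
    x = (word >> 1) & ((1 << X_BITS) - 1)
    y = (word >> (X_BITS + 1)) & ((1 << Y_BITS) - 1)
    return x, y, polarity


word = encode_aer(x=42, y=100, polarity=1)
print(word, decode_aer(word))  # 25685 (42, 100, 1)
```

Fitting each event into a single 15-bit word is one reason AER keeps per-event bandwidth low; real sensors add their own framing and timestamp formats on top.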

Energy Efficiency in Edge Computing

The AER bus transmits events only when changes occur, minimizing power consumption. This is critical for battery-operated devices.

Applications:

- Wearables: Supports always-on gesture control for smart glasses or AR headsets.

- Wildlife Monitoring: Enables long-term deployment in remote areas with limited power.

Comparison to Frame-Based Systems

| Scenario | CMOS Camera (30 FPS) | DVS Camera |
|---|---|---|
| Latency per “frame” | ~33 ms | Microsecond scale (varies with illumination) |
| Data Volume (1 s) | 30 full frames | Sparse event stream (scene-dependent) |
| Motion Blur | Likely with fast motion | Eliminated (no exposure integration) |
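The ~33 ms figure in the table is simply the frame interval at 30 FPS. A change that happens at a random moment waits on average half a frame interval before the next frame can capture it, and up to a full interval in the worst case (this sketch ignores exposure and readout time). The function name is illustrative.

```python
from typing import Tuple


def frame_sampling_delay_ms(fps: float) -> Tuple[float, float]:
    """Return (mean, worst-case) delay in ms before a frame camera samples a change
    occurring at a uniformly random time; exposure and readout are ignored."""
    interval_ms = 1000.0 / fps
    return interval_ms / 2, interval_ms


for fps in (30, 1000):
    mean_ms, worst_ms = frame_sampling_delay_ms(fps)
    print(f"{fps} FPS: mean {mean_ms:.2f} ms, worst {worst_ms:.2f} ms")
```

Even at 1000 FPS the worst case is a full millisecond, still far longer than the microsecond-scale timing of DVS events.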

Conclusion: A Neuromorphic Revolution for Real-Time Systems

The DVS’s low latency addresses critical bottlenecks in applications requiring instantaneous feedback, from autonomous machines to interactive technologies. Competitors like Prophesee’s Metavision sensors and Sony’s IMX636 also leverage event-based vision but differ in resolution, power efficiency, or hybrid capabilities. However, the DVS remains a benchmark for latency-critical use cases, solidifying its role in the next generation of responsive, intelligent systems.

By eliminating the limitations of frame-based imaging, the DVS redefines what’s possible in real-time perception.