The robotics industry stands at a critical juncture in visual perception technology. As autonomous systems become increasingly sophisticated, the debate between event-based and frame-based vision sensors has intensified, with each approach offering distinct advantages for different applications. Understanding the performance characteristics, computational requirements, and practical limitations of these competing technologies is essential for engineers, researchers, and organizations investing in next-generation robotic systems.

Understanding the Fundamental Difference

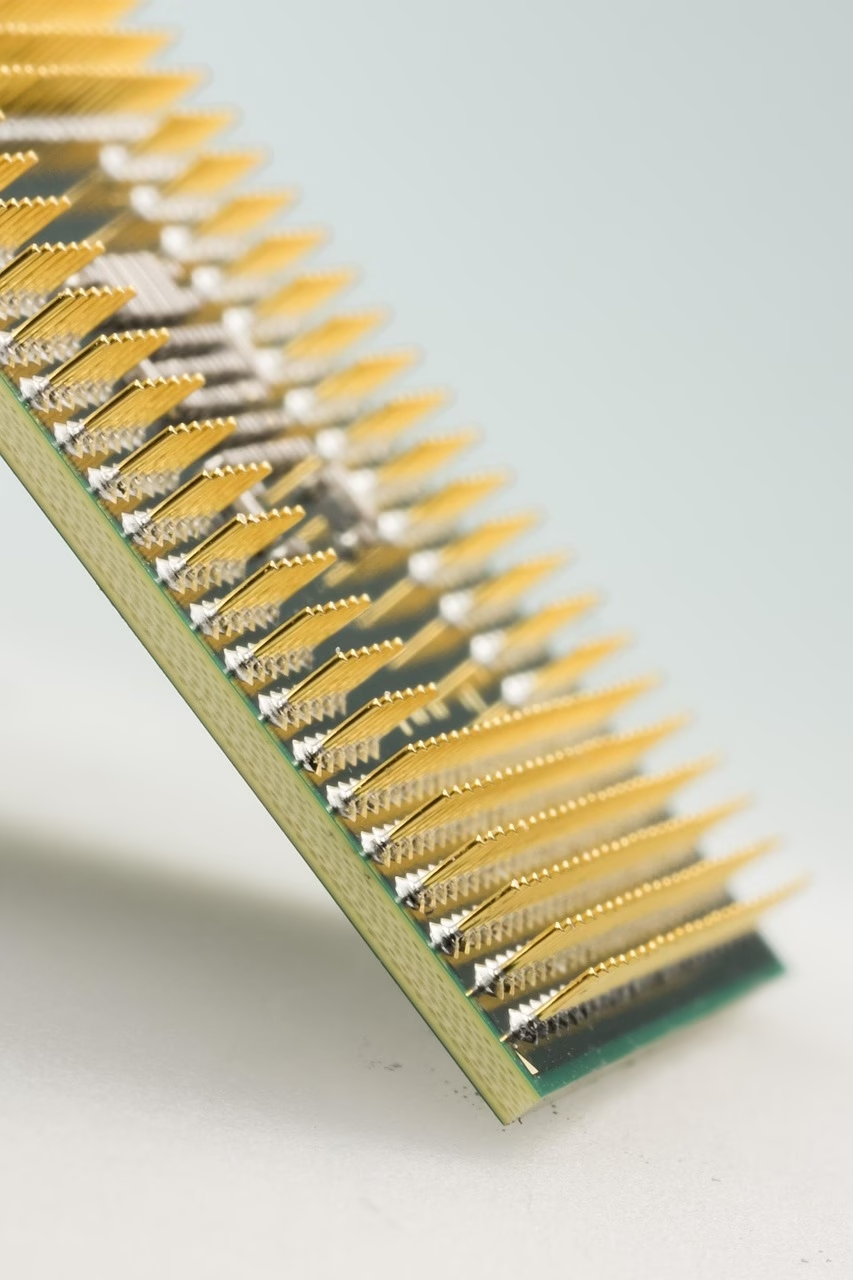

Frame-based vision sensors, the technology behind conventional cameras, capture images at fixed intervals, typically 30, 60, or 120 frames per second. At each interval the sensor exposes and reads out the entire pixel array, producing a complete snapshot of the scene whether or not anything in it has changed. This approach has dominated computer vision for decades, benefiting from mature algorithms, extensive software libraries, and widespread industry adoption.

Event-based vision sensors, also known as neuromorphic cameras or dynamic vision sensors (DVS), operate on an entirely different principle. Rather than capturing full frames at regular intervals, these biologically inspired sensors let each pixel independently and asynchronously monitor its own brightness, measured on a logarithmic scale. When the change in log intensity since a pixel's last event exceeds a contrast threshold, the pixel immediately emits an “event” containing its location, a timestamp, and the polarity of the change (brighter or darker). The result is a sparse, asynchronous stream of data that captures motion and temporal changes with microsecond timestamp resolution.
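The following is a minimal sketch of the idealized pixel model often used to describe these sensors, which makes the event-generation rule concrete. It assumes a noise-free pixel that compares log intensity against the level stored at its previous event. The `Event` class, the `pixel_events` function, and the 0.2 contrast threshold are illustrative choices, not any sensor vendor's interface.

```python
import math
from dataclasses import dataclass


@dataclass
class Event:
    x: int
    y: int
    t_us: int      # timestamp in microseconds
    polarity: int  # +1 = brighter, -1 = darker


def pixel_events(x, y, samples, threshold=0.2):
    """Idealized (noise-free) event generation for a single pixel.

    samples: time-ordered list of (t_us, intensity) pairs, intensity > 0.
    Hypothetical model: an event fires each time log intensity has moved
    by `threshold` away from the reference stored at the last event.
    """
    _, first_intensity = samples[0]
    ref = math.log(first_intensity)  # reference log intensity
    events = []
    for t_us, intensity in samples[1:]:
        log_i = math.log(intensity)
        # A large change can cross the threshold several times at once.
        while abs(log_i - ref) >= threshold:
            polarity = 1 if log_i > ref else -1
            ref += polarity * threshold
            events.append(Event(x, y, t_us, polarity))
    return events


# Brightening to 150% fires two ON events; dropping to 60% fires four OFF events.
bright = pixel_events(3, 4, [(0, 100.0), (1000, 150.0), (2000, 150.0), (3000, 60.0)])
# The same relative changes at 1/100 of the light level.
dim = pixel_events(3, 4, [(0, 1.0), (1000, 1.5), (2000, 1.5), (3000, 0.6)])
print([(e.t_us, e.polarity) for e in bright])
# -> [(1000, 1), (1000, 1), (3000, -1), (3000, -1), (3000, -1), (3000, -1)]
print([e.polarity for e in bright] == [e.polarity for e in dim])  # -> True
```

The comparison happens in log space, so scaling every intensity by the same factor leaves the events unchanged in this idealized model. Real pixels add noise, refractory periods, and light-dependent latency.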

Temporal Resolution and Latency Performance

One of the most significant advantages of event-based sensors is temporal resolution. The frame-based cameras typically used in robotics run at up to 120-240 frames per second. An event-based sensor, by contrast, timestamps each pixel's changes with microsecond resolution, giving an effective temporal resolution often quoted as equivalent to more than 10,000 frames per second. This difference translates directly into lower sensing latency for robotic systems.

In high-speed robotics applications, this temporal advantage can be decisive. Consider a drone flying at high velocity through a cluttered environment. A frame-based system cannot register a change until the next frame is captured. As a result, the latency between a visual event and its detection is typically 8-16 milliseconds even with optimized processing pipelines, roughly one frame period at 120 or 60 frames per second. Event-based sensors can detect and respond to visual changes within 1-2 milliseconds, enabling faster reactions that can mean the difference between successful navigation and a collision.
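The 8-16 millisecond figure lines up with the frame period of a 120 or 60 frames-per-second camera, which is the longest a change can wait before it is even sampled. The back-of-the-envelope sketch below shows what these latencies mean as distance flown before the robot can react. It assumes a hypothetical drone speed of 10 m/s and ignores exposure, readout, and processing time.

```python
def frame_period_ms(fps):
    """Worst-case wait before a change is sampled by a frame camera."""
    return 1000.0 / fps


def distance_before_reaction_cm(speed_m_s, latency_ms):
    """Distance travelled during the perception latency.

    m/s multiplied by ms gives mm; dividing by 10 converts to cm.
    """
    return speed_m_s * latency_ms / 10.0


SPEED_M_S = 10.0  # hypothetical drone speed

for fps in (60, 120):
    print(f"{fps} fps: frame period {frame_period_ms(fps):.1f} ms")

for label, latency_ms in [("frame-based", 16.0), ("frame-based", 8.0),
                          ("event-based", 2.0), ("event-based", 1.0)]:
    d = distance_before_reaction_cm(SPEED_M_S, latency_ms)
    print(f"{label}, {latency_ms:.0f} ms: {d:.0f} cm travelled")
```

At 10 m/s, the frame-based latencies correspond to 8-16 cm of travel before any reaction, versus 1-2 cm for the event-based figures.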

Motion blur is another critical limitation of frame-based sensors that event-based systems largely sidestep. When objects move rapidly across the visual field, a frame-based camera integrates light over the whole exposure period. A moving edge is therefore smeared across every pixel it crosses during that time, which degrades feature detection and tracking. Event-based sensors do not integrate over a shared exposure window; each pixel reports a change as soon as it detects it, so fast motion produces a dense trail of precisely timestamped events rather than a blurred image. Finite pixel bandwidth, especially in dim light, still limits how quickly a change can be resolved.
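The size of the blur follows from simple arithmetic: the streak length in pixels is the object's image-plane speed multiplied by the exposure time. Below is a minimal sketch using hypothetical numbers, namely an object crossing a 640-pixel-wide image in 0.2 s.

```python
def image_speed_px_per_s(image_width_px, crossing_time_s):
    """Image-plane speed of an object that crosses the full width."""
    return image_width_px / crossing_time_s


def blur_length_px(speed_px_per_s, exposure_ms):
    """Length of the streak smeared across the sensor during one exposure."""
    return speed_px_per_s * exposure_ms / 1000.0


speed = image_speed_px_per_s(640, 0.2)  # hypothetical: about 3200 px/s
for exposure_ms in (10.0, 1.0):
    print(f"{exposure_ms:g} ms exposure: {blur_length_px(speed, exposure_ms):.1f} px blur")
```

A 10 ms exposure smears the object over about 32 pixels. Shortening the exposure to 1 ms costs light, and therefore adds noise, yet still leaves about 3 pixels of blur.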

Power Consumption and Computational Efficiency

The architectural differences between these sensor types create profound implications for power consumption and computational requirements. Frame-based sensors continuously read out millions of pixels regardless of whether the scene contains useful visual information, consuming significant power in both the sensor itself and downstream processing systems.

Event-based sensors achieve remarkable efficiency by transmitting data only when visual changes occur. In static or slowly changing scenes, event cameras can reduce data rates by factors of 100 to 1,000 compared with frame-based equivalents. The event rate, and with it the advantage, depends on how much of the scene is changing. This sparsity translates into reduced power consumption, with reported figures of 10-100 times less power than frame-based systems in typical robotic scenarios.
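The data-rate comparison can be sketched with simple arithmetic. The resolution, bit depths, and event rates below are hypothetical. The 64 bits per event assumes an uncompressed record of x, y, timestamp, and polarity; real sensors use a variety of more compact encodings. The point is that frame bandwidth is fixed, while event bandwidth scales with how much the scene changes.

```python
def frame_stream_mbit_s(width, height, bits_per_pixel, fps):
    """Raw frame-camera bandwidth: every pixel, every frame."""
    return width * height * bits_per_pixel * fps / 1e6


def event_stream_mbit_s(events_per_s, bits_per_event=64):
    """Event-camera bandwidth: proportional to scene activity."""
    return events_per_s * bits_per_event / 1e6


frames = frame_stream_mbit_s(640, 480, 8, 60)  # hypothetical VGA, 8-bit, 60 fps
print(f"frames: {frames:.1f} Mbit/s regardless of scene content")

# Hypothetical event rates for increasingly active scenes
for scene, rate in [("nearly static", 10_000), ("moderate motion", 1_000_000),
                    ("fast, highly textured", 10_000_000)]:
    events = event_stream_mbit_s(rate)
    print(f"{scene}: {events:.2f} Mbit/s, frames/events = {frames / events:.2f}")
```

Under these assumptions, a nearly static scene needs roughly 230 times less bandwidth than the frame stream, while a very busy scene can exceed it. This is why the advantage is largest in static or slowly changing scenes.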

For battery-powered robots, this efficiency advantage can be crucial. A quadcopter equipped with event-based vision can extend its flight time by 20-40 percent compared with a frame-based system of equivalent processing capability. The actual gain depends on how large a share of the total power budget perception consumes. For ground robots operating where charging infrastructure is limited, event-based sensors enable longer operational periods and reduced downtime.
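A simple energy budget shows why the flight-time gain varies so much. Flight time is battery energy divided by total power draw, so battery capacity cancels out of the relative gain. The minimal sketch below uses hypothetical power figures for two platforms: a small one where perception is a large share of the budget, and a larger one where motors dominate.

```python
def flight_time_min(battery_wh, propulsion_w, perception_w):
    """Endurance in minutes for a constant total power draw."""
    return battery_wh / (propulsion_w + perception_w) * 60.0


def relative_flight_time_gain(propulsion_w, perception_before_w, perception_after_w):
    """Fractional increase in endurance from cutting perception power.

    Battery energy cancels: gain = P_total_before / P_total_after - 1.
    """
    before = propulsion_w + perception_before_w
    after = propulsion_w + perception_after_w
    return before / after - 1.0


# Hypothetical: perception (sensor + processing) drops from 15 W to 3 W.
for name, propulsion_w in [("small drone", 40.0), ("larger drone", 400.0)]:
    gain = relative_flight_time_gain(propulsion_w, 15.0, 3.0)
    print(f"{name}: {gain:.1%} longer flight")

# Same small-drone result from absolute endurance with a hypothetical 10 Wh battery
print(flight_time_min(10.0, 40.0, 3.0) / flight_time_min(10.0, 40.0, 15.0) - 1.0)
```

With these assumed numbers, the small platform gains close to 28 percent while the larger one gains about 3 percent. Gains in the 20-40 percent range are therefore most plausible where perception and its processing consume a sizeable fraction of total power.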

The computational efficiency extends beyond the sensor itself. Processing asynchronous events requires different algorithmic approaches than frame-based computer vision, but event-driven algorithms can be very efficient because they do work only where and when events arrive. Neuromorphic processors designed specifically for event-based data have been reported to perform some visual processing tasks with orders of magnitude less energy than conventional processors handling frame-based data.
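One widely used event-driven representation is the time surface, sometimes called a surface of active events. It stores the timestamp of the latest event at each pixel and reads it out as an exponentially decaying activity value. The minimal sketch below uses a hypothetical `TimeSurface` class and a 50 ms decay constant chosen for illustration. It shows the key efficiency property: each incoming event costs a constant amount of work, so total work scales with the number of events rather than with pixels times frame rate.

```python
import math


class TimeSurface:
    """Per-pixel map of recent activity, updated one event at a time."""

    def __init__(self, width, height, tau_us=50_000.0):
        self.tau_us = tau_us  # decay constant (hypothetical default: 50 ms)
        # Timestamp of the most recent event at each pixel; None = no event yet.
        self.last_t_us = [[None] * width for _ in range(height)]
        self.events_processed = 0

    def update(self, x, y, t_us):
        """Constant-time update: touch only the pixel that fired."""
        self.last_t_us[y][x] = t_us
        self.events_processed += 1

    def value(self, x, y, now_us):
        """Activity in [0, 1]: 1.0 for an event now, decaying with its age."""
        t = self.last_t_us[y][x]
        if t is None:
            return 0.0
        return math.exp(-(now_us - t) / self.tau_us)


ts = TimeSurface(640, 480)
for x, y, t in [(10, 20, 1_000), (11, 20, 1_500), (12, 20, 2_000)]:
    ts.update(x, y, t)
# Three events cost three updates, however large the sensor is.
print(ts.events_processed, round(ts.value(12, 20, 2_000), 3), round(ts.value(10, 20, 2_000), 3))
# -> 3 1.0 0.98
```

Practical pipelines typically keep separate surfaces for positive and negative polarity and feed local patches of them to feature detectors or classifiers.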

Dynamic Range and Low-Light Performance

Dynamic range, the ratio between the brightest and darkest light levels a sensor can capture simultaneously, is another area where event-based sensors hold a significant advantage. Conventional frame-based sensors typically offer 60-80 decibels of dynamic range and struggle in high-contrast environments where bright and dark regions coexist.

Event-based sensors achieve dynamic ranges exceeding 120 decibels, approaching the performance of the human visual system. This capability is invaluable for robots operating under uncontrolled lighting. Consider a robot moving from bright outdoor sunlight into a dark warehouse. A frame-based camera goes temporarily blind while its exposure settings adapt, but an event-based sensor is far less affected and keeps detecting visual features throughout the transition.
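For image sensors, dynamic range in decibels is conventionally computed as 20 times the base-10 logarithm of the ratio between the largest and smallest measurable signal. The quick conversion sketch below puts the 60-80 dB and 120 dB figures in perspective.

```python
import math


def ratio_to_db(max_to_min_ratio):
    """Sensor dynamic range in dB: 20 * log10(I_max / I_min)."""
    return 20.0 * math.log10(max_to_min_ratio)


def db_to_ratio(db):
    """Inverse conversion: brightest-to-darkest intensity ratio."""
    return 10.0 ** (db / 20.0)


for db in (60, 80, 120):
    print(f"{db} dB -> {db_to_ratio(db):,.0f} : 1")
```

The jump from 60-80 dB to 120 dB is therefore a factor of 100 to 1,000 in the intensity ratio a sensor can span at once.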

Low-light performance further differentiates these technologies. To operate in dim conditions, frame-based sensors must either lengthen exposure time, which reduces frame rate and increases motion blur, or raise sensor gain, which amplifies noise. Event-based sensors respond to relative rather than absolute brightness changes, so a given contrast triggers events across a wide range of light levels, although their latency and background noise increase as illumination falls. Event-based systems have been reported to detect and track objects in lighting conditions where frame-based cameras produce unusable images.

Algorithm Maturity and Development Ecosystem

Despite their impressive performance characteristics, event-based sensors face significant challenges in algorithm maturity and software ecosystem development. The computer vision community has invested decades in developing sophisticated algorithms for frame-based imagery—object detection, semantic segmentation, visual SLAM, and countless other techniques refined through extensive research and industrial application.

Event-based vision requires fundamentally different algorithmic approaches. While researchers have made significant progress developing event-based algorithms for feature tracking, optical flow estimation, and object recognition, the ecosystem remains nascent compared to frame-based computer vision. This maturity gap creates practical challenges for robotics engineers seeking to implement event-based solutions.

Transfer learning and pre-trained models, cornerstones of modern computer vision, present particular challenges for event-based systems. The vast libraries of pre-trained neural networks for frame-based vision cannot directly process asynchronous event streams. Researchers have developed two main workarounds. One converts events into frame-like representations accumulated over short time windows; the other trains networks directly on event data. Neither approach has yet reached the maturity and performance of its frame-based counterparts.
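One of the simplest conversions accumulates the events from a short time window into a per-pixel count image, with one channel per polarity. This yields a dense array that a conventional network can take as input. The minimal sketch below assumes events arrive as `(x, y, t_us, polarity)` tuples, a hypothetical layout; real drivers and file formats differ.

```python
def events_to_count_image(events, width, height, t_start_us, t_end_us):
    """Accumulate events with t_start_us <= t < t_end_us into counts.

    Returns img[channel][y][x], where channel 0 counts positive events
    and channel 1 counts negative events.
    """
    img = [[[0] * width for _ in range(height)] for _ in range(2)]
    for x, y, t_us, polarity in events:
        if t_start_us <= t_us < t_end_us:
            channel = 0 if polarity > 0 else 1
            img[channel][y][x] += 1
    return img


events = [(0, 0, 10, 1), (0, 0, 20, 1), (1, 0, 30, -1), (1, 1, 200, 1)]
img = events_to_count_image(events, width=2, height=2, t_start_us=0, t_end_us=100)
print(img[0])  # positive counts -> [[2, 0], [0, 0]]
print(img[1])  # negative counts -> [[0, 1], [0, 0]]
```

Collapsing the window discards the fine timing that makes events valuable. Richer variants split the window into several temporal bins or encode timestamps instead of counts, trading input size for temporal detail.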

Hybrid Approaches and Practical Implementation

Recognizing the complementary strengths of both technologies, many robotics researchers advocate for hybrid approaches combining event-based and frame-based sensors. Event-based sensors excel at detecting motion, temporal changes, and high-speed dynamics, while frame-based cameras provide rich spatial information, texture details, and compatibility with established algorithms.

Several commercially available sensors now integrate both modalities in a single package. The DAVIS (Dynamic and Active-pixel Vision Sensor) family, for example, outputs events and conventional intensity frames from the same pixel array. These hybrid systems let robots use event data for rapid reaction and motion detection while relying on frame-based imagery for detailed scene understanding and object recognition. Published benchmarks frequently report hybrid approaches outperforming either modality alone across a range of robotic tasks.

The practical implementation of event-based sensors in production robotics systems requires careful consideration of hardware interfaces, data synchronization, and calibration procedures. Event-based sensors typically output data through specialized interfaces designed for high-bandwidth asynchronous streams, necessitating different hardware architectures than frame-based systems. Software frameworks for event-based vision, while improving rapidly, require specialized expertise that many robotics organizations are still developing.
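Data synchronization often comes down to assigning each event to the frame interval it falls in. The sketch below assumes both streams already share a common clock and that timestamps are sorted. A shared clock is typically the case when one chip produces both streams; separate cameras would first need hardware triggering or an estimated time offset. `split_events_by_frames` is a hypothetical helper, not part of any specific framework.

```python
from bisect import bisect_left


def split_events_by_frames(event_times_us, frame_times_us):
    """Map each inter-frame interval to a slice of the event stream.

    Interval k covers frame_times_us[k] <= t < frame_times_us[k + 1].
    Both lists must be sorted and on the same clock. Returns a list of
    (start, end) index pairs into event_times_us, one per interval.
    """
    bounds = [bisect_left(event_times_us, ft) for ft in frame_times_us]
    return [(bounds[k], bounds[k + 1]) for k in range(len(bounds) - 1)]


event_times = [5, 12, 18, 25, 31, 40]
frame_times = [10, 20, 30, 40]
for k, (start, end) in enumerate(split_events_by_frames(event_times, frame_times)):
    print(f"frames {k}->{k + 1}: events {event_times[start:end]}")
```

Binary search keeps the cost logarithmic per frame, and returning index ranges avoids copying what may be millions of events.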

Application-Specific Performance Considerations

The relative performance of event-based and frame-based sensors varies dramatically across robotic applications. For high-speed manipulation tasks such as robotic ping-pong or ball catching, event-based sensors hold a clear advantage. Their microsecond temporal resolution makes it possible to track fast-moving objects that appear as blurs to conventional cameras.

Autonomous navigation presents a mixed picture. Event-based sensors excel at detecting obstacles during rapid motion, providing robust performance in dynamic environments. However, frame-based sensors often perform better for static scene understanding, texture-based localization, and detailed obstacle classification. Many research platforms for autonomous driving and mobile robotics carry both sensor types, using each where it provides the greatest advantage.

For industrial inspection and quality control, frame-based sensors typically remain the preferred choice. These applications prioritize spatial resolution and detailed texture analysis over temporal performance, playing to the strengths of conventional cameras. The mature algorithm ecosystem for frame-based defect detection and measurement further cements their position in industrial settings.

The Future Trajectory

The trajectory of vision sensor technology in robotics points toward increasing adoption of event-based sensors, particularly as algorithms mature and hardware costs decline. Event-based sensors currently cost an estimated 2-5 times more than comparable frame-based cameras, but economies of scale and manufacturing improvements are expected to narrow this gap.

Neuromorphic computing architectures specifically designed to process event-based data promise to further amplify the efficiency advantages of these sensors. As these specialized processors become more widely available, the computational benefits of event-based vision will become increasingly compelling for power-constrained robotics applications.

The ultimate outcome likely involves continued coexistence rather than replacement. Frame-based sensors will maintain their position in applications prioritizing spatial resolution, algorithm maturity, and detailed scene understanding. Event-based sensors will expand into domains where temporal resolution, power efficiency, and dynamic range prove decisive. Hybrid systems combining both modalities will increasingly become the standard for high-performance robotics applications requiring both rapid reaction and detailed perception.

Conclusion

Benchmarking event-based versus frame-based vision sensors reveals complementary technologies rather than direct competitors. Event-based sensors offer revolutionary advantages in temporal resolution, power efficiency, and dynamic range that prove transformative for high-speed, power-constrained robotics applications. However, frame-based sensors maintain significant advantages in algorithm maturity, spatial resolution, and ecosystem support that keep them essential for many robotic tasks.

The robotics community’s path forward involves understanding the nuanced strengths of each technology and deploying them where they provide maximum value. As event-based algorithms mature and hybrid systems become more sophisticated, robots will gain unprecedented visual capabilities combining the millisecond reaction times of neuromorphic sensors with the detailed scene understanding of conventional cameras. This convergence promises to unlock new frontiers in robotic autonomy, from high-speed aerial navigation to delicate manipulation tasks requiring both speed and precision.