The DYNAP Neuromorphic Processor

Neuromorphic processors represent a paradigm shift in computation, drawing inspiration from the human brain. This biologically inspired approach diverges from traditional von Neumann architectures by embracing massive parallelism, event-driven processing, and very low power consumption. The increasing demands of modern artificial intelligence, particularly in edge computing scenarios where resources are constrained and real-time responsiveness is critical, have exposed the limitations of conventional computing paradigms. This has led to growing recognition that neuromorphic processors can address these challenges with more energy-efficient and biologically plausible solutions.

At the forefront of this field stands the Institute of Neuroinformatics (INI), a research institution established in 1995 as a joint venture between the University of Zurich and ETH Zurich. INI’s core mission is to uncover the fundamental principles governing neural computation and to translate these insights into the design and implementation of intelligent artificial systems. A significant outcome of INI’s sustained research is the Dynamic Neuromorphic Asynchronous Processor (DYNAP) family, which embodies the principles of brain-inspired computation in hardware designed to perform complex tasks with high efficiency. The family encompasses several distinct flavors, each tailored to specific computational demands: the general-purpose DYNAP-SE, the DYNAP-ROLLS with its integrated on-chip learning capabilities, and the DYNAP-CNN, which is optimized for processing convolutional neural networks. Neuromorphic hardware like DYNAP is a step towards computing solutions that not only mimic the brain’s efficiency and speed but are also inherently suited to continuous, real-time processing at the network’s edge. This is particularly relevant given the ever-increasing volume of sensory data and the need for localized intelligence in many applications. INI’s long-standing presence in neuromorphic engineering means the DYNAP processors are the product of extensive expertise and iterative refinement across successive chip generations.

Core Principles of the DYNAP Processor

The DYNAP processor family operates on fundamental principles that distinguish it from conventional processors. A key aspect is its event-driven nature, where computations are not dictated by a global clock but are instead triggered by discrete events, known as spikes. This approach mirrors the communication mechanism in biological nervous systems, where neurons communicate through sparse, asynchronous spikes. Complementing this is the asynchronous operation of the DYNAP architecture, where different processing units on the chip operate independently, responding to the arrival of these events. This inherent parallelism allows for efficient processing of complex tasks by distributing the computational load across multiple units that are active only when necessary.

The event-driven and asynchronous operation of DYNAP confers significant advantages, particularly in terms of power efficiency. Unlike traditional systems that consume power continuously, even when idle, DYNAP processors only expend energy when processing incoming events. This results in sparse activity and a substantial reduction in overall energy consumption, making them ideal for power-constrained applications. Furthermore, the event-driven paradigm facilitates low latency in processing. Information is processed and transmitted almost instantaneously upon the occurrence of relevant changes in the input, avoiding the delays associated with clocked, frame-based systems. This is particularly crucial for real-time applications where timely responses are essential. The design of DYNAP, with its event-driven and asynchronous nature, directly reflects the information processing mechanisms found in biological nervous systems. This strong bio-inspired design philosophy aims to replicate the remarkable efficiency and speed observed in neural computation. For instance, the “always-on” capability highlighted for DYNAP-CNN is a direct consequence of its event-driven architecture. By only consuming power when there is relevant input, it avoids the energy waste of continuous computation, making it well-suited for applications that require continuous monitoring and immediate response to changes in the environment.
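The efficiency argument above can be made concrete with a rough count of state updates. The sketch below is only a back-of-the-envelope illustration: the workload numbers are hypothetical, and update counts are a proxy for activity, not a measurement of power on any DYNAP chip.

```python
def clocked_updates(num_neurons: int, num_steps: int) -> int:
    """A clocked design evaluates every neuron on every tick, active or not."""
    return num_neurons * num_steps


def event_driven_updates(num_spikes: int, fanout: int) -> int:
    """An event-driven design only updates the targets of each spike."""
    return num_spikes * fanout


# Hypothetical workload (illustrative numbers, not measurements):
# 1024 neurons observed for 1000 time steps, during which 50 spikes occur
# and each spike is delivered to 64 target neurons.
dense = clocked_updates(num_neurons=1024, num_steps=1000)
sparse = event_driven_updates(num_spikes=50, fanout=64)
print(f"clocked: {dense} updates, event-driven: {sparse} updates")
print(f"ratio: {dense / sparse:.0f}x fewer updates")
```

With sparse input the event-driven count scales with the number of spikes rather than with elapsed time, which is the property the text attributes to "always-on" operation.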

Internal Architecture of the DYNAP Chip

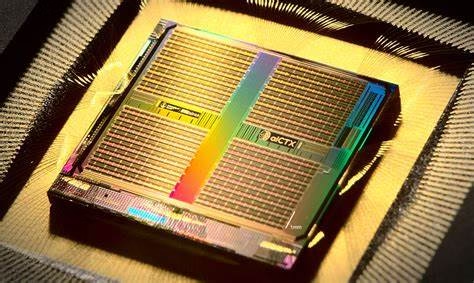

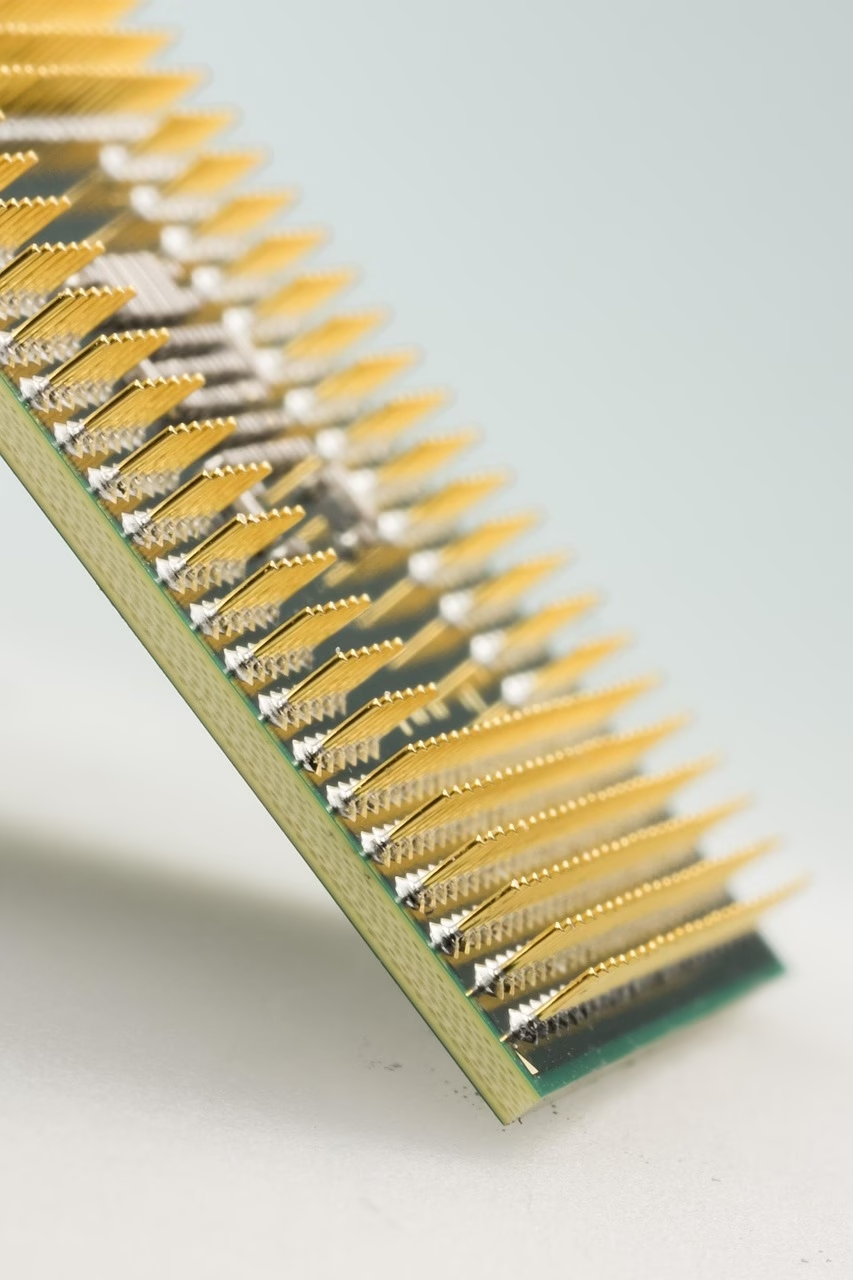

A typical DYNAP chip features a multi-core architecture, housing multiple neural cores on a single integrated circuit. For example, the DYNAP-SE2 variant incorporates four such cores, each containing an array of 256 neurons. These neural cores serve as the fundamental computational units within the DYNAP architecture, comprising analog neurons and their associated circuitry. The analog nature of these circuits is crucial for achieving low power consumption and emulating the complex dynamics of biological neurons with a high degree of realism.

Integral to the functionality of each neural core are memory blocks that facilitate connectivity and configuration. Content-Addressable Memory (CAM) is employed to store information about pre-synaptic connections, effectively holding the “tags” of neurons that a particular neuron is listening to. In some implementations, the CAM might also store synaptic weights or rules governing synaptic plasticity. Static RAM (SRAM) complements the CAM by storing receiving event configurations and other operational parameters for the neurons within the core.
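The tag-matching role of the CAM can be sketched in software as follows. This is a hypothetical model, not the chip's register layout: the class name, the synapse-type strings, and the default of 64 entries (chosen to echo the per-neuron synapse count quoted for DYNAP-SE2) are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class CamNeuron:
    """Hypothetical neuron holding a small CAM of (tag, synapse type) entries."""
    max_entries: int = 64
    cam: list = field(default_factory=list)       # [(tag, synapse_type), ...]
    received: list = field(default_factory=list)  # synapse types that were hit

    def listen_to(self, tag: int, synapse_type: str) -> None:
        """Store the tag of a pre-synaptic neuron this neuron listens to."""
        if len(self.cam) >= self.max_entries:
            raise ValueError("CAM is full")
        self.cam.append((tag, synapse_type))

    def on_event(self, tag: int) -> None:
        # A hardware CAM compares the incoming tag against all entries at once;
        # this loop is only a sequential stand-in for that parallel match.
        for stored_tag, synapse_type in self.cam:
            if stored_tag == tag:
                self.received.append(synapse_type)


neuron = CamNeuron()
neuron.listen_to(7, "AMPA")
neuron.listen_to(9, "GABA")
neuron.on_event(7)   # matches the first entry
neuron.on_event(3)   # no entry stores tag 3, so the event is ignored
print(neuron.received)
```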

Communication both within and between DYNAP chips relies on the Address-Event Representation (AER) protocol. In this scheme, spikes are not transmitted as direct voltage or current signals but rather as “events” that include the address or identifier of the spiking neuron. These address events are transmitted asynchronously, allowing for flexible and efficient routing of information across the chip and to other interconnected DYNAP chips. The architecture of DYNAP is fundamentally mixed-signal, integrating both analog and digital circuits. Analog circuits, operating in the subthreshold regime to minimize power consumption, are responsible for implementing the intricate dynamics of neurons and synapses, thus achieving biological realism. Conversely, digital circuits handle essential tasks such as spike routing, configuration of the chip’s parameters, and communication using the AER protocol. Furthermore, DYNAP chips typically include an on-chip bias generator, which is used to precisely tune the parameters of the analog circuits, allowing for customization of neuronal and synaptic behavior.
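As a minimal sketch of the address-event idea, a spike can be represented as a single integer word that identifies its source. The bit layout below (2 bits for the core, 8 bits for the neuron) is an assumption derived from the four-core, 256-neuron example; the actual DYNAP event format carries additional routing information and is not reproduced here.

```python
CORE_BITS = 2     # assumption: 4 cores per chip
NEURON_BITS = 8   # assumption: 256 neurons per core


def encode_event(core_id: int, neuron_id: int) -> int:
    """Pack the source of a spike into one address word."""
    if not 0 <= core_id < (1 << CORE_BITS):
        raise ValueError("core_id out of range")
    if not 0 <= neuron_id < (1 << NEURON_BITS):
        raise ValueError("neuron_id out of range")
    return (core_id << NEURON_BITS) | neuron_id


def decode_event(word: int) -> tuple:
    """Recover (core_id, neuron_id) from an address word."""
    return word >> NEURON_BITS, word & ((1 << NEURON_BITS) - 1)


word = encode_event(core_id=2, neuron_id=17)
print(word, decode_event(word))
```

Only the address travels on the bus; the timing of the spike is implicit in the moment the event is sent, which is why no timestamp field appears in this sketch.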

The multi-core structure and the AER-based communication infrastructure inherent in DYNAP architectures enable a high degree of scalability. This allows for the construction of larger and more sophisticated Spiking Neural Networks by seamlessly interconnecting multiple DYNAP chips. The division of labor between analog computation for emulating neural dynamics and a digital infrastructure for managing routing and control is a key design principle. This approach effectively leverages the strengths of both analog and digital domains, resulting in a system that is both energy-efficient and highly flexible in terms of configuring diverse network topologies.

Table 1: General DYNAP Architecture Components

| Component Name | Function | Implementation |
| --- | --- | --- |
| Neural Cores | Fundamental computational units | Analog |
| Neurons | Processing units that receive and transmit spikes | Analog |
| Synapses | Connections between neurons, mediating signal transmission | Analog |
| CAM | Stores pre-synaptic connection information (neuron tags) | Digital |
| SRAM | Stores receiving event configurations and parameters | Digital |
| AER | Protocol for inter-neuron and inter-chip communication | Digital (asynchronous) |
| Bias Generator | Tunes parameters of analog circuits | Analog |

Implementation of Spiking Neural Networks (SNNs) on DYNAP

Spiking Neural Networks, composed of interconnected neurons that communicate via discrete spikes, find a natural implementation on the DYNAP hardware platform. The architecture of DYNAP is specifically designed to support the event-driven nature of SNNs, allowing for efficient mapping of neural network structures onto the physical resources of the chip.

A primary neuron model implemented across the DYNAP family, particularly in the DYNAP-SE variant, is the Adaptive Exponential Integrate-and-Fire (AdExpIF) model. This model captures essential neuronal dynamics through a set of differential equations that describe the evolution of the neuron’s membrane potential and an adaptation variable. A key feature of the AdExpIF model is the inclusion of an exponential term that governs the rapid depolarization leading to spike generation. The model also incorporates adaptation mechanisms, allowing neurons to adjust their firing rate in response to sustained stimulation, a crucial property observed in biological neurons.
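The two differential equations of the model can be explored with a short software simulation. The sketch below uses forward-Euler integration and commonly quoted textbook parameter values; these values, the step size, and the spike-detection level are assumptions for illustration and do not correspond to DYNAP bias settings or to the behavior of the analog circuit.

```python
import math

# Textbook-style AdEx parameters in SI units (assumed for illustration;
# they are not DYNAP bias settings).
C = 281e-12         # membrane capacitance (F)
G_L = 30e-9         # leak conductance (S)
E_L = -70.6e-3      # leak reversal / resting potential (V)
V_T = -50.4e-3      # exponential threshold (V)
DELTA_T = 2e-3      # slope factor (V)
TAU_W = 144e-3      # adaptation time constant (s)
A = 4e-9            # subthreshold adaptation (S)
B = 0.0805e-9       # spike-triggered adaptation increment (A)
V_RESET = -70.6e-3  # reset potential (V)
V_PEAK = 0.0        # numerical spike-detection level (V)


def simulate_adex(i_in: float, duration: float = 0.5, dt: float = 1e-4) -> list:
    """Forward-Euler integration; returns the list of spike times in seconds."""
    v, w = E_L, 0.0
    spike_times = []
    for step in range(int(round(duration / dt))):
        # C dV/dt = -g_L (V - E_L) + g_L Delta_T exp((V - V_T)/Delta_T) - w + I
        dv = (-G_L * (v - E_L)
              + G_L * DELTA_T * math.exp((v - V_T) / DELTA_T)
              - w + i_in) / C
        # tau_w dw/dt = a (V - E_L) - w
        dw = (A * (v - E_L) - w) / TAU_W
        v += dt * dv
        w += dt * dw
        if v >= V_PEAK:          # spike: reset V and increment adaptation
            v = V_RESET
            w += B
            spike_times.append((step + 1) * dt)
    return spike_times


spikes = simulate_adex(i_in=1e-9)   # 1 nA step current
print(len(spikes), "spikes")
```

The exponential term produces the sharp upswing before each spike, while the variable `w` accumulates with every spike and opposes the input current, which is the adaptation mechanism described above.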

Synaptic interactions in SNNs implemented on DYNAP are typically modeled using the Differential Pair Integrator (DPI) log-domain filter. This analog circuit emulates the behavior of biological synapses by integrating incoming spike events over time, producing a post-synaptic current that influences the membrane potential of the receiving neuron. The DYNAP platform supports several types of synapses, including AMPA and NMDA (both excitatory), as well as GABA and SHUNT (both inhibitory). These different synapse types allow for the implementation of diverse network dynamics, mirroring the complex interplay of excitation and inhibition in biological neural circuits.
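To a first approximation, the integrating behavior described above resembles a first-order low-pass filter: each incoming spike adds a fixed amount of current, which then decays exponentially. The sketch below models only that linear approximation. The class name, time constants, and weights are hypothetical, and the nonlinear behavior of the real current-mode circuit is ignored.

```python
import math


class FirstOrderSynapse:
    """Linear stand-in for a DPI-like synapse: jump on spike, exponential decay."""

    def __init__(self, tau: float, weight: float):
        self.tau = tau            # decay time constant (s)
        self.weight = weight      # current added per incoming spike (arbitrary units)
        self.current = 0.0
        self.last_time = 0.0

    def _decay_to(self, t: float) -> None:
        if t < self.last_time:
            raise ValueError("events must arrive in time order")
        self.current *= math.exp(-(t - self.last_time) / self.tau)
        self.last_time = t

    def on_spike(self, t: float) -> float:
        """Integrate one incoming spike at time t and return the new current."""
        self._decay_to(t)
        self.current += self.weight
        return self.current

    def read(self, t: float) -> float:
        """Return the post-synaptic current at time t."""
        self._decay_to(t)
        return self.current


# Hypothetical time constants: a fast synapse and a slow one.
fast = FirstOrderSynapse(tau=0.005, weight=1.0)
slow = FirstOrderSynapse(tau=0.100, weight=1.0)
for syn in (fast, slow):
    syn.on_spike(0.0)
print(fast.read(0.02), slow.read(0.02))
```

Because the state is only touched when an event arrives or when the current is read, this formulation also fits the event-driven style of the hardware.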

For certain DYNAP variants, most notably DYNAP-ROLLS, mechanisms for synaptic plasticity and learning rules are integrated directly onto the chip. Spike-Timing-Dependent Plasticity (STDP) is a key learning rule often implemented, where the strength of a synapse is modified based on the precise timing of pre- and post-synaptic spikes. This enables the network to learn temporal patterns and adapt its behavior over time without requiring external intervention.
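The timing dependence of STDP is often illustrated with the textbook pair-based rule, sketched below. The amplitudes, time constants, and weight bounds are assumed values, and the rule is a generic software formulation; the on-chip circuits may implement a different spike-based rule.

```python
import math

A_PLUS = 0.01      # potentiation amplitude (assumed)
A_MINUS = 0.012    # depression amplitude (assumed)
TAU_PLUS = 0.020   # potentiation time constant in seconds (assumed)
TAU_MINUS = 0.020  # depression time constant in seconds (assumed)


def stdp_dw(delta_t: float) -> float:
    """Weight change for one spike pair, with delta_t = t_post - t_pre."""
    if delta_t > 0:      # pre before post: strengthen the synapse
        return A_PLUS * math.exp(-delta_t / TAU_PLUS)
    if delta_t < 0:      # post before pre: weaken the synapse
        return -A_MINUS * math.exp(delta_t / TAU_MINUS)
    return 0.0


def apply_stdp(weight: float, t_pre: float, t_post: float,
               w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Update a weight for one spike pair and keep it within its bounds."""
    return min(w_max, max(w_min, weight + stdp_dw(t_post - t_pre)))


print(apply_stdp(0.5, t_pre=0.010, t_post=0.015))  # causal pair: weight grows
print(apply_stdp(0.5, t_pre=0.015, t_post=0.010))  # anti-causal pair: weight shrinks
```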

The processing of information within an SNN on DYNAP follows an event-driven flow. Neurons remain largely inactive until they receive input spikes from connected pre-synaptic neurons. Upon receiving an event, a neuron updates its internal state (e.g., membrane potential) and may, in turn, generate an output spike if its membrane potential crosses a certain threshold. This spike is then transmitted as an AER event to its post-synaptic targets, continuing the chain of event-driven computation across the network. The selection of the AdExpIF neuron model for implementation on DYNAP represents a strategic balance between achieving biological realism and maintaining computational efficiency. This model’s ability to reproduce a wide range of neuronal firing patterns and capture complex temporal dynamics makes it suitable for both emulating biological neural circuits and developing sophisticated artificial intelligence applications. Similarly, the adoption of the DPI synapse model allows DYNAP to accurately simulate the temporal aspects of synaptic interactions, including variations in time constants and the diverse effects mediated by different neurotransmitters. This contributes significantly to the biological plausibility and computational power of the platform.
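The event-driven flow described above can be mimicked in software with a priority queue of pending events. In this sketch the neurons are simple non-leaky accumulators with a threshold and reset, and the function name, the fixed delay, and the event limit are assumptions for illustration; it shows the control flow only, not the AdExpIF dynamics.

```python
import heapq


def run_event_driven(connections, thresholds, input_events,
                     delay=1, max_events=10_000):
    """Propagate events until the queue is empty.

    connections:  {source: [(target, weight), ...]}
    thresholds:   {neuron: firing threshold}
    input_events: [(time, target, weight), ...]
    Returns the emitted spikes as (time, neuron) pairs.
    """
    potential = {n: 0.0 for n in thresholds}
    queue = list(input_events)
    heapq.heapify(queue)
    spikes = []
    handled = 0
    while queue and handled < max_events:   # the limit guards recurrent loops
        time, target, weight = heapq.heappop(queue)
        handled += 1
        potential[target] += weight          # state changes only on an event
        if potential[target] >= thresholds[target]:
            potential[target] = 0.0          # reset after spiking
            spikes.append((time, target))
            for nxt, w in connections.get(target, []):
                heapq.heappush(queue, (time + delay, nxt, w))
    return spikes


# Hypothetical three-neuron network: 0 drives 1 and 2, and 1 also drives 2.
connections = {0: [(1, 1.0), (2, 0.5)], 1: [(2, 0.5)]}
thresholds = {0: 1.0, 1: 1.0, 2: 1.0}
out = run_event_driven(connections, thresholds, input_events=[(0, 0, 1.0)])
print(out)
```

Neuron 2 fires only after both of its inputs have arrived, and no neuron is evaluated at any time step in which it receives nothing.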

DYNAP-SE: A Versatile Neuromorphic Platform

The DYNAP-SE variant (including both DYNAP-SE1 and its successor, DYNAP-SE2) stands out as a highly versatile neuromorphic platform within the DYNAP family. The DYNAP-SE2 architecture, for instance, features 1024 Adaptive Exponential Integrate-and-Fire (AdExpIF) neurons distributed across four independently configurable neural cores, with each neuron receiving input from up to 64 synapses. DYNAP-SE1 shares a similar core structure. A key feature of DYNAP-SE is its configurable recurrent connectivity, which enables the creation of intricate network topologies suitable for a wide range of computational tasks. The presence of an on-chip bias generator allows for precise tuning of the analog circuit parameters, offering a high degree of flexibility in shaping the behavior of neurons and synapses. The platform supports four distinct types of synapses: AMPA, mediating fast excitatory post-synaptic potentials; NMDA, responsible for slower excitatory potentials and voltage-dependent gating; GABA, inducing inhibitory post-synaptic potentials; and SHUNT, providing shunting inhibition.
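The figures quoted above imply simple capacity limits that are worth checking before mapping a network. The sketch below derives the totals from the per-core numbers and flags neurons whose fan-in would exceed the 64-synapse budget; the helper name and the example network are hypothetical.

```python
CORES = 4
NEURONS_PER_CORE = 256
MAX_FAN_IN = 64   # synapses available to each neuron

total_neurons = CORES * NEURONS_PER_CORE
total_synapses = total_neurons * MAX_FAN_IN


def neurons_over_budget(fan_in_by_neuron: dict, max_fan_in: int = MAX_FAN_IN) -> list:
    """Return the ids of neurons whose requested fan-in exceeds the budget."""
    return sorted(n for n, fan_in in fan_in_by_neuron.items() if fan_in > max_fan_in)


print(total_neurons, total_synapses)
# Hypothetical network: neuron 2 asks for more inputs than one neuron offers.
print(neurons_over_budget({0: 10, 1: 64, 2: 100}))
```

The product of 1024 neurons and 64 synapses per neuron gives 65,536 synapses, which matches the rounded figure of about 65k used for DYNAP-SE.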

Given its flexibility and rich set of features, DYNAP-SE has found extensive use in research applications. These include robotics control systems, such as those for achieving smooth trajectory interpolation in robotic arms, and sensory processing tasks, notably tactile sensing applications aimed at recognizing and classifying object shapes based on tactile input. DYNAP-SE’s low power consumption also makes it an attractive platform for prototyping solutions in low-power edge computing scenarios. In addition, the platform serves as a tool for fundamental neuroscience research, enabling the emulation of biologically realistic neural circuits to study neural dynamics and computational principles. With its configurable parameters and support for a diverse array of synapse types, DYNAP-SE lets researchers prototype a wide spectrum of spiking neural network architectures and investigate various computational models. Complementing the hardware is a dedicated simulator for DYNAP-SE, which accelerates the development and deployment of SNNs by allowing researchers to design, train, and test their networks in simulation before committing them to the physical chip.

DYNAP-ROLLS: Enabling On-Chip Learning and Adaptation

The DYNAP-ROLLS processor distinguishes itself within the DYNAP family through its primary feature: integrated on-chip learning capabilities facilitated by plastic synapses. Architecturally, DYNAP-ROLLS comprises 256 silicon neurons and roughly 128,000 synapse circuits, split about evenly between plastic (learning) synapses and programmable synapses, offering a rich substrate for implementing adaptive neural networks. The learning mechanisms supported by DYNAP-ROLLS include Spike-Timing-Dependent Plasticity (STDP), a biologically plausible rule that modifies synaptic weights based on the precise temporal relationship between pre- and post-synaptic spikes. This allows the chip to learn and adapt in real time to incoming data without requiring offline training.

The learning capabilities of DYNAP-ROLLS make it well-suited for adaptive systems that need to learn and adjust their behavior in response to real-world interactions. It has also been employed in pattern recognition tasks, where its on-chip plasticity enables it to learn and classify input patterns. Furthermore, DYNAP-ROLLS has been used to model higher-level cognitive functions, such as working memory and decision-making, through hardware attractor networks that leverage the dynamics of recurrently connected spiking neurons. It is worth noting that some sources list ROLLS as being in an “End Of Life” or “Discontinued or Unsupported” status, suggesting it may represent an earlier generation in the DYNAP processor lineage. Even so, its focus on on-chip learning sets it apart as a platform for investigating biologically inspired learning mechanisms and for developing neuromorphic systems that adapt without relying on external training processes.

DYNAP-CNN: Optimized for Convolutional Spiking Neural Networks

The DYNAP-CNN variant within the DYNAP family is specifically engineered and optimized for the efficient implementation of Spiking Convolutional Neural Networks (SCNNs), primarily targeting vision processing applications. This processor boasts a significant capacity, featuring up to 1 million configurable spiking neurons and a direct interface for seamless integration with Dynamic Vision Sensors (DVS). In terms of architecture, DYNAP-CNN can support multiple convolutional layers, with some configurations featuring up to nine such layers, along with pooling layers to achieve spatial invariance. Notably, the layers within the DYNAP-CNN architecture can also be configured to operate as dense, fully connected layers, providing flexibility for different network topologies, particularly for final classification stages.

A key feature of DYNAP-CNN is its dedicated interface for directly connecting to event-based sensors like DVS cameras. This direct interface allows for ultra-low latency processing of visual information, as the chip can process the asynchronous event streams generated by the DVS without the need for intermediate conversion or buffering. As a result of its architecture and features, DYNAP-CNN is ideally suited for a range of computer vision tasks, including object recognition, real-time gesture recognition, autonomous navigation in robots and drones, and intelligent surveillance systems, especially in scenarios where power consumption is a critical constraint. To facilitate the development and deployment of applications on DYNAP-CNN, SynSense, the company behind its commercialization, provides development kits and software tools such as SINABS, a Python library for converting deep learning models to SCNNs, and SAMNA, a middleware for interfacing with sensors and visualizing data. DYNAP-CNN represents a specialized approach within the DYNAP family, with a clear focus on accelerating the computations inherent in convolutional neural networks within the spiking domain. This targeted design makes it highly efficient for addressing a wide array of vision-related tasks. The integration of a direct interface for Dynamic Vision Sensors in DYNAP-CNN underscores the powerful synergy between event-based sensing and event-based processing. This combination enables the creation of ultra-low latency and highly power-efficient solutions for a variety of dynamic vision applications.
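The idea behind converting a conventional deep learning model into a spiking one can be illustrated with rate coding: an integrate-and-fire neuron driven by a constant input fires at a rate that approximates a ReLU activation. The sketch below is a generic illustration of that principle. It does not use the SINABS API, and the function names and parameters are hypothetical.

```python
def if_neuron_rate(input_per_step: float, num_steps: int,
                   threshold: float = 1.0) -> float:
    """Firing rate of an integrate-and-fire neuron with subtractive reset."""
    v = 0.0
    spikes = 0
    for _ in range(num_steps):
        v += input_per_step
        if v >= threshold:
            v -= threshold   # subtractive reset keeps the leftover charge
            spikes += 1
    return spikes / num_steps


def relu(x: float) -> float:
    return max(0.0, x)


for x in (-0.5, 0.25, 0.5):
    print(x, relu(x), if_neuron_rate(x, num_steps=100))
```

Negative inputs never reach the threshold, so the rate is zero, while positive inputs below the threshold are reproduced as a proportional spike rate. Since at most one spike is emitted per step, the rate saturates at 1 for larger inputs, which is one reason conversion tools typically rescale weights and activations.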

Comparative Analysis of DYNAP Flavors

The DYNAP family of neuromorphic processors offers a range of options tailored to different computational needs. The following table summarizes the key architectural and functional differences between the three primary flavors: DYNAP-SE, DYNAP-ROLLS, and DYNAP-CNN.

Table 2: Comparison of DYNAP Processor Flavors

| Feature | DYNAP-SE | DYNAP-ROLLS | DYNAP-CNN |
| --- | --- | --- | --- |
| Approximate Number of Neurons | ~1024 | 256 | Up to 1M |
| Approximate Number of Synapses | ~65k | ~128k (about 64k plastic + 64k programmable) | >50G CNN, ~2M fully-connected |
| Primary Neuron Model | AdExpIF | AdExpIF | Integrate-and-Fire |
| On-Chip Learning Capabilities | No direct on-chip learning (plasticity configurable) | Yes (STDP) | No direct on-chip learning |
| Primary Application Focus | General-purpose SNN research, robotics, sensory processing | On-chip learning and adaptive systems, pattern recognition | Convolutional vision processing, low-power vision tasks |
| Analog/Digital Implementation | Mixed-signal | Mixed-signal | Digital |

Diverse Applications and Research with DYNAP Processors

The DYNAP family of processors has been used in a wide array of research projects and applications across several domains. DYNAP-SE has been employed in robotics to achieve smooth and continuous motion in robotic arms through trajectory interpolation. In sensory processing, DYNAP (likely DYNAP-SE) has been used to develop systems for recognizing and clustering objects based on tactile data obtained from sensor arrays. Researchers have also used DYNAP-SE to investigate auditory processing by configuring its neurons to function as disynaptic delay elements, enabling the study of temporal coding and feature detection. In the biomedical field, DYNAP-SE2 has shown promise in the analysis of biosignals, including the real-time detection of epileptic seizures from EEG recordings. Studies have further explored the use of DYNAP (again, likely DYNAP-SE) to model complex cognitive functions, such as the sequential memory processes believed to occur in the hippocampus. DYNAP-ROLLS, with its on-chip learning capabilities, has been applied to tasks like real-time image classification using data from spiking vision sensors. Finally, DYNAP-CNN has demonstrated its effectiveness in low-power edge AI applications, including keyword recognition and other tasks requiring efficient processing of sensory data at the network’s edge.

The development and deployment of these applications on DYNAP hardware have been significantly facilitated by specialized software frameworks and tools. Libraries such as Rockpool, SINABS, and SAMNA provide the abstractions needed to configure, program, and interface with the DYNAP processors, streamlining the translation of theoretical neural network models into practical hardware implementations. The wide spectrum of applications, spanning robotics, sensory processing, biomedical engineering, and cognitive modeling, highlights the broad applicability of the platform. Software tools and simulators tailored to the DYNAP architecture lower the barrier to entry and make the technology accessible to a wider community of researchers and developers.

Conclusion and Future Directions

The DYNAP family of neuromorphic processors represents a significant advancement in the field of brain-inspired computing. Rooted in bio-inspired principles and characterized by an event-driven architecture, these processors offer high energy efficiency and versatility across their different flavors. The DYNAP-SE serves as a flexible platform for general-purpose Spiking Neural Network research, the DYNAP-ROLLS enables the exploration of on-chip learning and adaptive systems, and the DYNAP-CNN accelerates convolutional neural networks in the spiking domain, particularly for vision-related tasks. Ongoing advancements are expected in hardware design, the sophistication of neuron and synapse models, the development of more powerful learning algorithms, and the refinement of user-friendly software tools. Research and development efforts at INI and by companies like SynSense continue to push the boundaries of neuromorphic technology with the DYNAP platform. Despite the significant progress, challenges remain, including the inherent complexity of configuring these neuromorphic systems, the variability introduced by device mismatch in analog circuits, and the ongoing need for standardized benchmarks and comprehensive development tools. The continued evolution of the DYNAP family, evidenced by newer versions like DYNAP-SE2 and DYNAP-CNN, reflects an ongoing effort to enhance performance, introduce new features, and address these challenges. The increasing global interest in neuromorphic computing, demonstrated by the development of diverse neuromorphic chips and systems, positions the DYNAP processors as a contribution to a broader movement towards brain-inspired computation, one that could benefit many fields through gains in efficiency and biological plausibility.

Sources

- Large-Scale Neuromorphic Spiking Array Processors: A Quest to Mimic the Brain – Frontiers, accessed April 7, 2025, https://www.frontiersin.org/journals/neuroscience/articles/10.3389/fnins.2018.00891/full

- Neuromorphic Hardware Guide, accessed April 7, 2025, https://open-neuromorphic.org/neuromorphic-computing/hardware/

- Neuromorphic artificial intelligence systems – PMC – PubMed Central, accessed April 7, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC9516108/

- (PDF) DYNAP-SE2: a scalable multi-core dynamic neuromorphic asynchronous spiking neural network processor – ResearchGate, accessed April 7, 2025, https://www.researchgate.net/publication/377295131_DYNAP-SE2_a_scalable_multi-core_dynamic_neuromorphic_asynchronous_spiking_neural_network_processor

- Gradient-descent hardware-aware training and deployment for mixed-signal Neuromorphic processors – arXiv, accessed April 7, 2025, https://arxiv.org/pdf/2303.12167

- Welcome! | Institute of Neuroinformatics | UZH, accessed April 7, 2025, https://www.ini.uzh.ch/

- DYNAP-SEL: An ultra-low power mixed signal Dynamic Neuromorphic Asynchronous Processor with SElf Learning abilities, accessed April 7, 2025, https://niceworkshop.org/wp-content/uploads/2019/04/NICE-2019-Day-1h-Giacomo-Indiveri.pdf

- Large-Scale Neuromorphic Spiking Array Processors: A Quest to Mimic the Brain – PMC, accessed April 7, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC6287454/

- DYNAP™-CNN: Neuromorphic Processor With 1M Spiking Neurons – SynSense, accessed April 7, 2025, https://www.synsense.ai/products/dynap-cnn/

- A Look at DynapCNN – SynSense – Neuromorphic Chip, accessed April 7, 2025, https://open-neuromorphic.org/neuromorphic-computing/hardware/dynap-cnn-synsense/

- A Look at ROLLS – INI – Neuromorphic Chip, accessed April 7, 2025, https://open-neuromorphic.org/neuromorphic-computing/hardware/rolls-ini/

- Neuromorphic Chip DYNAP™-SE2 – SynSense, accessed April 7, 2025, https://www.synsense.ai/products/neuromorphic-chip-dynap-se2/

- DYNAP-CNN: the World’s First 1M Neuron, Event-Driven Neuromorphic AI Processor for Vision Processing | SynSense, accessed April 7, 2025, https://www.synsense.ai/dynap-cnn-the-worlds-first-1m-neuron-event-driven-neuromorphic-ai-processor-for-vision-processing/

- Overview – 2.0.2 – Sinabs, accessed April 7, 2025, https://sinabs.readthedocs.io/en/v2.0.2/speck/overview.html

- Time-invariant shape recognition using tactile sensors on neuromorphic hardware (in collaboration with the University of Chicago). – SiROP, accessed April 7, 2025, https://sirop.org/app/d0c3ded3-8ffc-47d6-9a71-f8d4b3169a21?_s=g0u6ZcSPXVZQv-S2&_k=FgiYV5XFk47A31iH&40

- Enabling Efficient Processing of Spiking Neural Networks with On-Chip Learning on Commodity Neuromorphic Processors for Edge AI Systems – arXiv, accessed April 7, 2025, https://arxiv.org/html/2504.00957v1

- Overview of Dynap-SE2 – Rockpool 2.9.1 documentation, accessed April 7, 2025, https://rockpool.ai/devices/DynapSE/dynapse-overview.html

- DYNAP-SE1 introduction – Samna 0.44.0.0+dirty documentation – GitLab, accessed April 7, 2025, https://synsense-sys-int.gitlab.io/samna/devkits/dynapSeSeries/dynapse1/contents/intro.html

- [2303.12167] Gradient-descent hardware-aware training and deployment for mixed-signal Neuromorphic processors – ar5iv, accessed April 7, 2025, https://ar5iv.labs.arxiv.org/html/2303.12167

- Synaptic Delays for Insect-Inspired Temporal Feature Detection in Dynamic Neuromorphic Processors – PMC – PubMed Central, accessed April 7, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC7059595/

- Dynap-SE2 developer notes – Rockpool 2.9.1 documentation, accessed April 7, 2025, https://rockpool.ai/devices/DynapSE/developer.html

- The Dynap-se chip | Institute of Neuroinformatics – Universität Zürich, accessed April 7, 2025, https://www.ini.uzh.ch/en/research/groups/ncs/chips/Dynap-se.html

- Towards spiking analog hardware implementation of a trajectory interpolation mechanism for smooth closed-loop control of a spiking robot arm – arXiv, accessed April 7, 2025, https://arxiv.org/html/2501.17172v1

- Synaptic Integration of Spatiotemporal Features with a Dynamic Neuromorphic Processor – DiVA portal, accessed April 7, 2025, https://www.diva-portal.org/smash/get/diva2:1392851/FULLTEXT01.pdf

- The ROLLS chip | Institute of Neuroinformatics – Universität Zürich, accessed April 7, 2025, https://www.ini.uzh.ch/en/research/groups/ncs/chips/ROLLS.html

- YigitDemirag/dynapse-simulator: A minimal and … – GitHub, accessed April 7, 2025, https://github.com/YigitDemirag/dynapse-simulator

- A dynamic neuro-synaptic hardware platform for Spiking Neural Networks, accessed April 7, 2025, https://neurophysics.ucsd.edu/courses/physics_171/A%20dynamic%20neuro-synaptic%20hardware%20platform%20for%20Spiking%20Neural%20Networks.pdf

- Firing patterns in the adaptive exponential integrate-and-fire model – PMC – PubMed Central, accessed April 7, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC2798047/

- Adaptive exponential integrate-and-fire model – Scholarpedia, accessed April 7, 2025, http://www.scholarpedia.org/article/Adaptive_exponential_integrate-and-fire_model

- 6.1 Adaptive Exponential Integrate-and-Fire | Neuronal Dynamics online book, accessed April 7, 2025, https://neuronaldynamics.epfl.ch/online/Ch6.S1.html

- Adaptive Exponential Integrate-and-Fire Model as an Effective Description of Neuronal Activity – American Journal of Physiology, accessed April 7, 2025, https://journals.physiology.org/doi/full/10.1152/jn.00686.2005

- DynapSim Neuron Model – Rockpool 2.9.1 documentation, accessed April 7, 2025, https://rockpool.ai/devices/DynapSE/neuron-model.html

- Synaptic Delays for Insect-Inspired Temporal Feature Detection in Dynamic Neuromorphic Processors – Frontiers, accessed April 7, 2025, https://www.frontiersin.org/journals/neuroscience/articles/10.3389/fnins.2020.00150/full

- Toward Silicon-Based Cognitive Neuromorphic ICs: A Survey – The University of Texas at Dallas, accessed April 7, 2025, https://www.utdallas.edu/~gxm112130/papers/dt16.pdf

- Diff-pair integrator synapse. | Download Scientific Diagram – ResearchGate, accessed April 7, 2025, https://www.researchgate.net/figure/Diff-pair-integrator-synapse_fig4_6124045

- A Realistic Simulation Framework for Analog/Digital Neuromorphic Architectures – arXiv, accessed April 7, 2025, https://arxiv.org/html/2409.14918v1

- Low-Power Neuromorphic Chip DYNAP™-SEL – SynSense, accessed April 7, 2025, https://www.synsense.ai/products/neuromorphic-chip-dynap-sel/

- On-chip unsupervised learning in Winner-Take-All networks of spiking neurons – Yulia Sandamirskaya, accessed April 7, 2025, https://sandamirskaya.eu/resources/KreiserEtAl_BioCAS2017.pdf

- Organizing Sequential Memory in a Neuromorphic Device Using Dynamic Neural Fields, accessed April 7, 2025, https://www.frontiersin.org/journals/neuroscience/articles/10.3389/fnins.2018.00717/full

- Pattern recognition with spiking neural networks and the ROLLS low-power online learning neuromorphic processor – DiVA portal, accessed April 7, 2025, http://www.diva-portal.org/smash/get/diva2:1088709/FULLTEXT03.pdf

- Photo of the DYNAP-SE2 chip, which has an area of 98 mm² manufactured… – ResearchGate, accessed April 7, 2025, https://www.researchgate.net/figure/Photo-of-the-DYNAP-SE2-chip-which-has-an-area-of-98mm-manufactured-in-180-nm-CMOS_fig1_377295131

- Giacomo INDIVERI | Professor (Associate) | PhD | University of Zurich, Zürich | UZH | Institute of Neuroinformatics | Research profile – ResearchGate, accessed April 7, 2025, https://www.researchgate.net/profile/Giacomo-Indiveri

- Institute of Neuroinformatics – SiROP – Students Searching Theses and Research Projects, accessed April 7, 2025, https://sirop.org/app/organization/0c3aa371-1ab5-4281-bc9d-066f794ea5ad?_s=Zz-tje5mcO86YOzr&_k=KyeFo7El48GfPRCf&57

- DYNAP-SE2: a scalable multi-core dynamic neuromorphic asynchronous spiking neural network processor – ResearchGate, accessed April 7, 2025, https://www.researchgate.net/journal/Neuromorphic-Computing-and-Engineering-2634-4386/publication/377295131_DYNAP-SE2_a_scalable_multi-core_dynamic_neuromorphic_asynchronous_spiking_neural_network_processor/links/65bb3fab1bed776ae31b1910/DYNAP-SE2-a-scalable-multi-core-dynamic-neuromorphic-asynchronous-spiking-neural-network-processor.pdf

- Training and Deploying Spiking NN Applications to the Mixed-Signal Neuromorphic Chip Dynap-SE2 with Rockpool – ResearchGate, accessed April 7, 2025, https://www.researchgate.net/publication/369449804_Training_and_Deploying_Spiking_NN_Applications_to_the_Mixed-Signal_Neuromorphic_Chip_Dynap-SE2_with_Rockpool

- Overview – 2.0.0 – Sinabs, accessed April 7, 2025, https://sinabs.readthedocs.io/en/v2.0.0/speck/overview.html

- Event Driven Neural Network on a Mixed Signal Neuromorphic Processor for EEG Based Epileptic Seizure Detection – bioRxiv, accessed April 7, 2025, https://www.biorxiv.org/content/10.1101/2024.05.22.595225v4.full.pdf

- Giacomo Indiveri – Person, accessed April 7, 2025, https://services.ini.uzh.ch/admin/modules/uzh/person.php?id=9312

- Editorial: Hardware implementation of spike-based neuromorphic computing and its design methodologies – PMC, accessed April 7, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC9854259/

- SENECA: building a fully digital neuromorphic processor, design trade-offs and challenges, accessed April 7, 2025, https://www.frontiersin.org/journals/neuroscience/articles/10.3389/fnins.2023.1187252/full

- Neuromorphic dreaming: A pathway to efficient learning in artificial agents – arXiv, accessed April 7, 2025, https://arxiv.org/html/2405.15616v1

- Giacomo Indiveri (0000-0002-7109-1689) – ORCID, accessed April 7, 2025, https://orcid.org/0000-0002-7109-1689